group.pyhelper moduleseqmatch.pyuses sequence matching to find similar text filesseqmatch_m.pyis the multithreaded version

minhash.pyuses MinHash and Jaccard similarity estimation algorithms, based on http://mccormickml.com/2015/06/12/minhash-tutorial-with-python-code/minhash_m.pyis the multiprocessing version, with shared memory access and simplified data structures (Unix only)minhash_m_init.pyis Windows-compatible; uses an initializer to preserve global variable states https://medium.com/@rvprasad/data-and-chunk-sizes-matter-when-using-multiprocessing-pool-map-in-python-5023c96875efminhash_dss.pyuses delimited search spaceminhash_v.pyis the vectorized version; the use of an initializer avoids synchronization stalls and memory bubbles with large inputs. Unix only, as changes to the NumPy array do not get carried back to the main process in Windows

- a simple heuristic can significantly decrease running time while still finding a large majority of similar files

- if the input iterator is very large, using

mapwill lead to a segmentation fault - if a function returns results faster than they can be consumed, using it with

imapandimap_unorderedwill cause a memory bubble pypy3is significantly faster thanpython3, but it did not support Numba- minimize your inputs before passing them off to a function (e.g. grouping results uses

connected_componentsfromnetworkxwhich had high space complexity) - vectorize, if possible

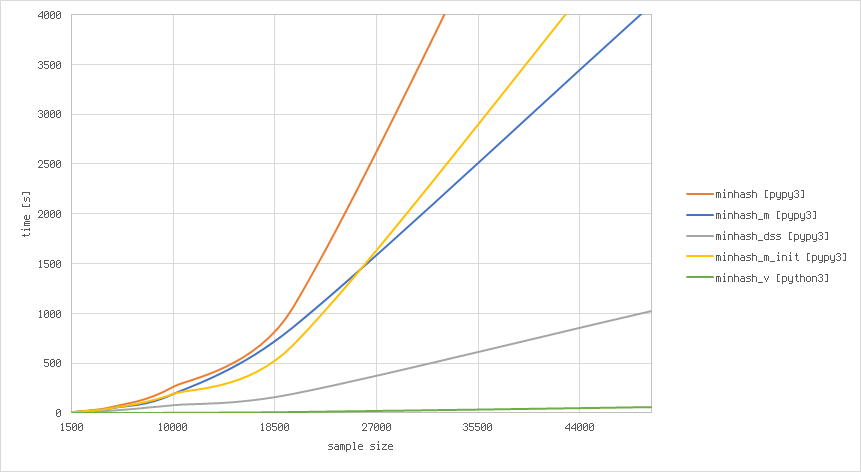

a random selection of non-duplicate text files of varying sizes (0-1767kb):

| script | interpreter | 1500 | 5000 | 10000 | 20000 | 50000 |

|---|---|---|---|---|---|---|

seqmatch.py |

python3 |

2503.13s |

||||

seqmatch_m.py |

python3 |

658.70s |

||||

minhash.py |

pypy3 |

10.52s |

67.52s |

266.74s |

1060.77s |

8278.96s |

minhash_m.py |

pypy3 |

7.87s |

51.07s |

190.73s |

859.66s |

4094.37s |

minhash_m_init.py |

pypy3 |

7.56s |

52.22s |

191.57s |

680.1s |

5070.27s |

minhash_dss.py |

pypy3 |

5.33s |

22.19s |

75.49s |

189.22s |

1019.88s |

minhash_v.py |

python3 |

3.06s |

4.19s |

6.19s |

13.51s |

59.42s |

Leave a Reply